1. What Is Memory?

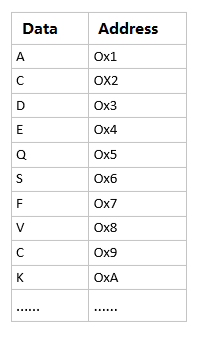

Memory consists of a large but finite number of memory cells (on most modern computers, each cell holds one byte), and each cell has a fixed number called its memory address. The address is unique and permanently bound to its cell: a given address always refers to that particular cell.

Memory addresses are usually written in hexadecimal notation: the prefix “0x” followed by hexadecimal digits.

As the diagram shows, each piece of data in memory has a corresponding address. These addresses are what allow a program to locate and access data in the computer’s memory.

2. Memory Addressing Method

2.1 Logically, memory is a series of cells that store data as binary bits. Each cell is assigned a unique number, its memory address, and that number is permanently bound to the cell's physical storage space. This one-to-one correspondence between addresses and cells is the memory addressing method.

2.2 During program execution, the CPU works only with memory addresses; it does not care where the corresponding physical storage is located or how it is laid out. The hardware guarantees that a given address always leads to its corresponding cell, so a cell's address and its physical storage space are two aspects of the same thing.

3. Relationship Between Memory and Data Types

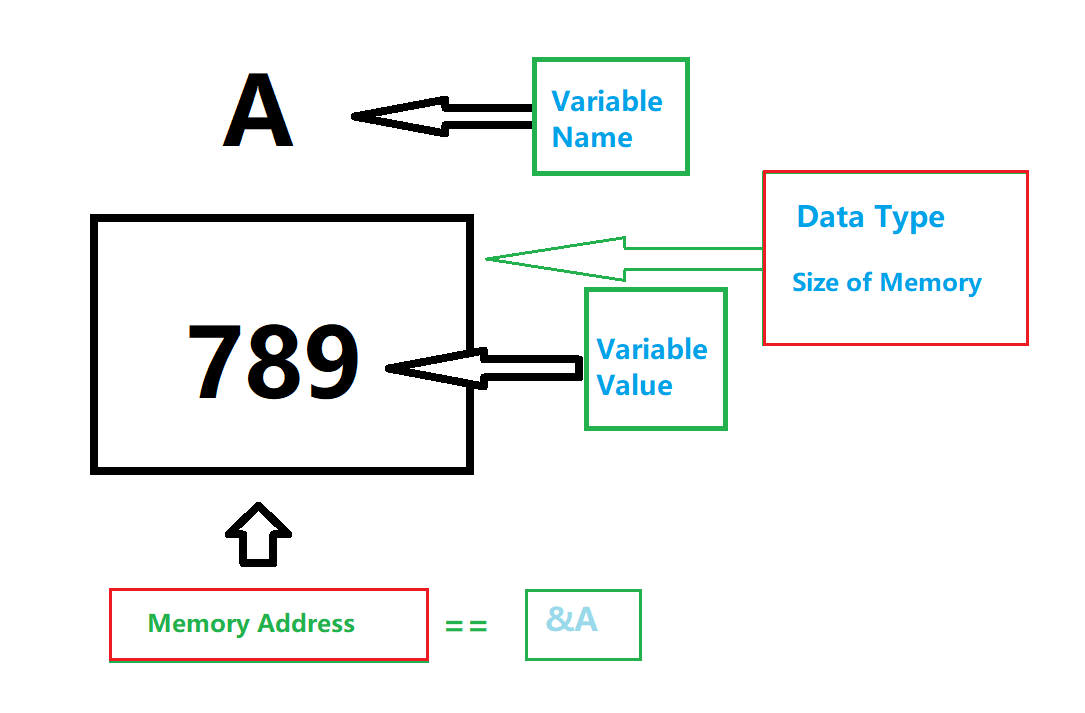

Data types are used to define variables, which are stored and operated on in memory. A data type therefore needs to suit the way the hardware accesses memory: a well-matched type is accessed efficiently, while a poorly matched one may be slower or, on some hardware, not directly supported.

On a 32-bit system, int is usually the most efficient type for variables. Such a system is built around 32-bit words: its registers, and typically its data bus, are 32 bits wide, so a 32-bit int can be loaded, stored, and operated on natively. You can also define 8-bit char or 16-bit short variables, but on some processors accessing and operating on them is less efficient, because the CPU may need extra instructions to extract or extend the narrower values.

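In many 32-bit environments, boolean values have traditionally been represented with int, even though a boolean needs only 1 bit (the Windows API's BOOL, for instance, is a typedef for int). When a boolean variable such as b1 is stored this way, 32 bits of memory hold 1 bit of information, so 31 bits are wasted; the benefit is that the value is accessed as a native 32-bit word. Note that the standard C _Bool type (bool in <stdbool.h>) and the C++ bool type usually occupy 1 byte on common platforms, although the exact size is implementation-defined.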

Question: in practice, should we prioritize saving memory or running efficiency? There is no fixed answer; it depends on the situation. Many years ago, memory was expensive and machines had very little of it, so code was written primarily to save memory. Today, thanks to advances in semiconductor technology, memory is cheap and most machines have plenty of it, so saving a few bytes rarely matters, while efficiency and user experience have become critical. As a result, most programming today prioritizes efficiency, although memory-constrained systems such as small embedded devices may still favor saving memory.